
Published January 24, 2020

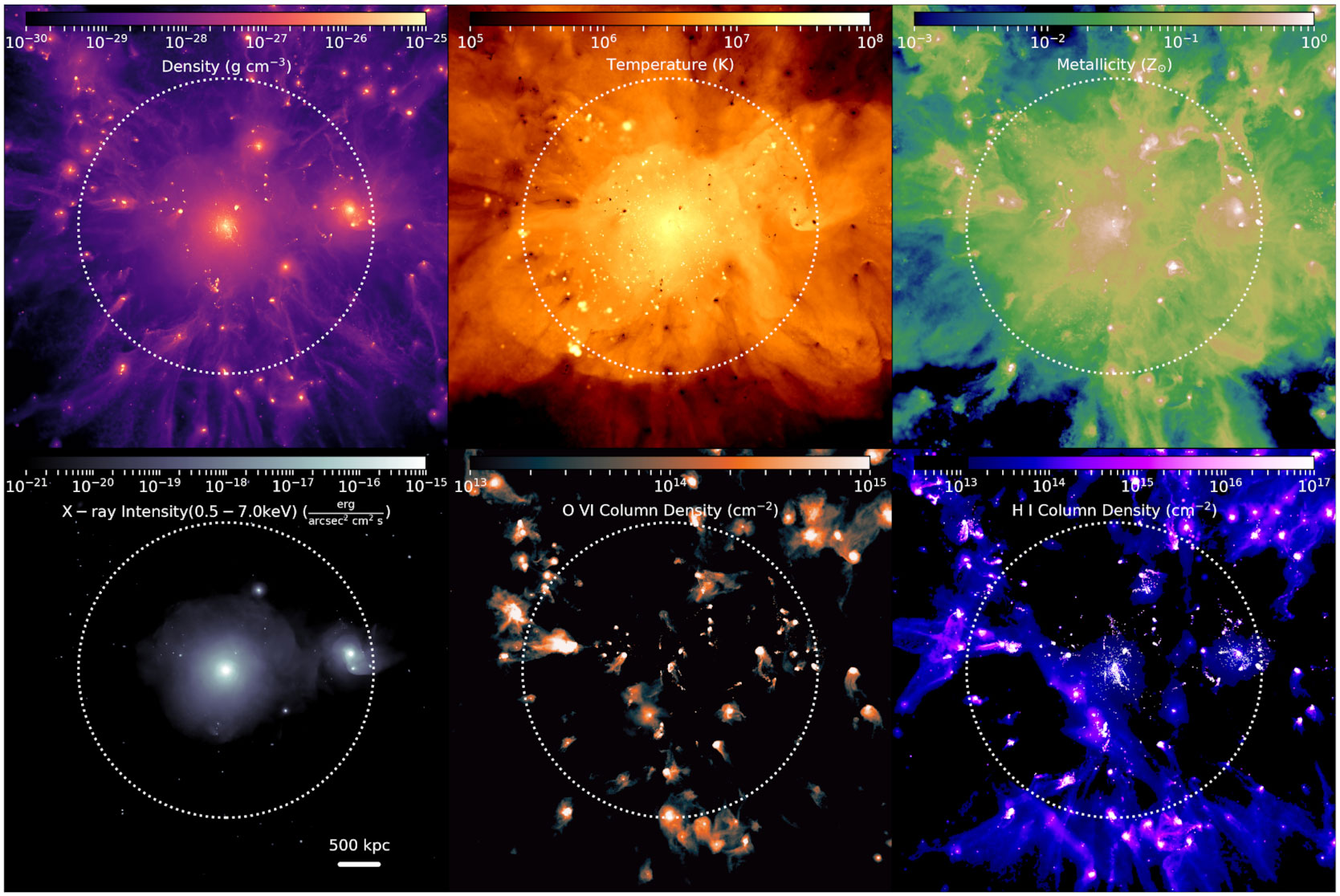

A 5x5 megaparsec (~16.3 million light-years) snapshot of the RomulusC simulation at redshift z = 0.31. The top row shows density-weighted projections of gas density, temperature, and metallicity. The bottom row shows the integrated X-ray intensity, O VI column density, and H I column density. Credit: Iryna Butsky et al.

By Jorge Salazar (TACC) and Jan Zverina (SDSC)

Inspired by the spacefaring Romulans of Star Trek, astrophysicists have developed cosmological computer simulations called RomulusC, where the ‘C’ stands for galaxy cluster. With a focus on black hole physics, RomulusC has produced some of the highest-resolution simulations ever made of galaxy clusters, which can contain hundreds or even thousands of galaxies.

On Star Trek, the Romulans powered their spaceships with an artificial black hole. In reality, it turns out that black holes can drive the formation of stars and the evolution of whole galaxies. Studying such clusters is helping scientists map out the unknown universe.

The study, called ‘Ultraviolet Signatures of the Multiphase Intracluster and Circumgalactic Media in the RomulusC Simulation’ and published late last year in the Monthly Notices of the Royal Astronomical Society, explored the multiphase gas in the intracluster medium, which fills the space between galaxies in a galaxy cluster, and in the circumgalactic medium surrounding individual galaxies.

“We find that there’s a substantial amount of this cool-warm gas in galaxy clusters,” said study co-author Iryna Butsky, a PhD student at the University of Washington. “We see that this cool-warm gas traces extremely different and complementary structures compared to the hot gas. And we also predict that this cool-warm component can be observed now with existing instruments like the Hubble Space Telescope mass spectrograph.”

Scientists are just beginning to probe the intracluster medium, which is virtually invisible to optical telescopes. The cool-warm gas in particular has proven difficult to detect, even with X-rays. Scientists are using RomulusC to interpret observations of clusters in ultraviolet (UV) light from background quasars shining through the gas, taken with instruments such as the Cosmic Origins Spectrograph aboard the Hubble Space Telescope.

The research team applied a software tool called Trident, developed by Cameron Hummels of Caltech and colleagues. Trident generates synthetic absorption-line spectra from the simulation and then adds noise and the known instrumental characteristics of the Hubble Space Telescope.
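Trident itself is a Python package built on the yt analysis toolkit, and its real interface is not shown here. As a rough illustration of the last two steps described above, the toy sketch below uses NumPy only and assumes a single absorption line with a Gaussian optical-depth profile, a Gaussian line-spread function standing in for Hubble's instrument response, and Gaussian pixel noise. The function name `toy_absorption_spectrum` and all parameter values are hypothetical.

```python
import numpy as np


def toy_absorption_spectrum(velocity, v_center, b, tau0, lsf_sigma, snr, seed=0):
    """Hypothetical toy model of a mock spectrum (NOT Trident's API).

    velocity  : uniformly spaced velocity grid, km/s
    v_center  : line center, km/s
    b         : Doppler parameter (width of the Gaussian profile), km/s
    tau0      : optical depth at line center
    lsf_sigma : width of the assumed Gaussian line-spread function, km/s
    snr       : assumed signal-to-noise ratio per pixel
    """
    # Intrinsic line: Gaussian optical depth; transmitted flux = exp(-tau)
    tau = tau0 * np.exp(-(((velocity - v_center) / b) ** 2))
    flux = np.exp(-tau)

    # Instrument blurring: convolve with a normalized Gaussian kernel
    dv = velocity[1] - velocity[0]
    half = int(np.ceil(4.0 * lsf_sigma / dv))
    offsets = np.arange(-half, half + 1) * dv
    kernel = np.exp(-0.5 * (offsets / lsf_sigma) ** 2)
    kernel /= kernel.sum()
    # Pad with edge values so the continuum is not pulled down at the ends
    padded = np.pad(flux, half, mode="edge")
    smoothed = np.convolve(padded, kernel, mode="valid")

    # Detector noise: Gaussian with sigma = 1/snr relative to the continuum
    rng = np.random.default_rng(seed)
    noisy = smoothed + rng.normal(0.0, 1.0 / snr, size=smoothed.shape)
    return flux, smoothed, noisy


velocity = np.linspace(-500.0, 500.0, 2001)  # 0.5 km/s pixels
flux, smoothed, noisy = toy_absorption_spectrum(
    velocity, v_center=0.0, b=30.0, tau0=2.0, lsf_sigma=7.0, snr=15.0
)
print(f"intrinsic line depth: {1 - flux.min():.3f}")
print(f"observed line depth:  {1 - smoothed.min():.3f}")
```

Because the kernel is normalized, the simulated instrument makes the line shallower and broader while leaving its equivalent width (the summed absorbed fraction) unchanged; the noise level then limits how weak a line can be and still be detected.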

“The end result is a very realistic looking spectrum that we can directly compare to existing observations,” said Butsky. “But what we can’t do with observations is reconstruct three-dimensional information from a one-dimensional spectrum. That’s what’s bridging the gap between observations and simulations.”
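To see why a single sightline loses depth information, consider a simplified picture (an illustration, not taken from the study) that ignores velocity structure. A column density such as those mapped for O VI and H I in the figure is the integral of an ion's number density $n$ along the path length $\ell$ through the gas:

$$N = \int_0^{L} n(\ell)\,d\ell$$

A uniform cloud of density $n_0$ and depth $L$ and a cloud twice as dense but half as deep both give $N = n_0 L$, so the spectrum alone cannot tell them apart. A simulation, which tracks $n$ everywhere in three dimensions, can.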

Researchers used the Comet supercomputer at the San Diego Supercomputer Center (SDSC) at UC San Diego, the Stampede2 system at the Texas Advanced Computing Center (TACC), Blue Waters at the National Center for Supercomputing Applications (NCSA), and NASA’s Pleiades system.

SDSC’s petascale Comet supercomputer fills a particular niche, according to study co-author Tom Quinn, a professor of astronomy at the University of Washington.

“It has large-memory nodes available,” he said. “Particular aspects of the analysis, for example identifying the galaxies, are not easily done on a distributed-memory machine. Having the large shared-memory machine available was very beneficial. In a sense, we didn’t have to completely parallelize that particular aspect of the analysis. That’s the main thing, having the big data machine.”

Said Butsky: “What I think is really cool about using supercomputers to model the universe is that they play a unique role in allowing us to do experiments. In many of the other sciences, you have a lab where you can test your theories. But in astronomy, you can come up with a pen-and-paper theory and observe the universe as it is. But without simulations, it’s very hard to actually run these tests because it’s hard to reproduce some of the extreme phenomena in space, like temporal scales and getting the temperatures and densities of some of these extreme objects. Simulations are extremely important in being able to make progress in theoretical work.”

Additional co-authors of the study, funded by the National Science Foundation and NASA, include Jessica K. Werk (University of Washington), Joseph N. Burchett (UC Santa Cruz), and Daisuke Nagai and Michael Tremmel (Yale University).

About SDSC

As an Organized Research Unit of UC San Diego, SDSC is considered a leader in data-intensive computing and cyberinfrastructure, providing resources, services, and expertise to the national research community, including industry and academia. Cyberinfrastructure refers to an accessible, integrated network of computer-based resources and expertise, focused on accelerating scientific inquiry and discovery. SDSC supports hundreds of multidisciplinary programs spanning a wide variety of domains, from earth sciences and biology to astrophysics, bioinformatics, and health IT. SDSC’s petascale Comet supercomputer is a key resource within the National Science Foundation’s XSEDE (Extreme Science and Engineering Discovery Environment) program.
